This post was inspired by a bit of musing about what would happen if PIs tried to crowd-source parts of their proposals. The obvious answer, to us at least, was that we would almost certainly, and immediately, receive a proposal titled “Granty McGrantface.” We’re presuming you are familiar with the reference; but if not, see these links. While the saga of our friends at NERC turned out pretty well, it reminded us of two things: 1) asking the internet to decide for you is a risky proposition, and 2) (the focus of this post) no matter our intentions, some of the stuff[i] we do, or that stems from the funding we provide to you, will get noticed by a wide audience. Most stuff tends to go unnoticed, but from time to time something goes viral.

Therefore: What you choose to call your project matters.

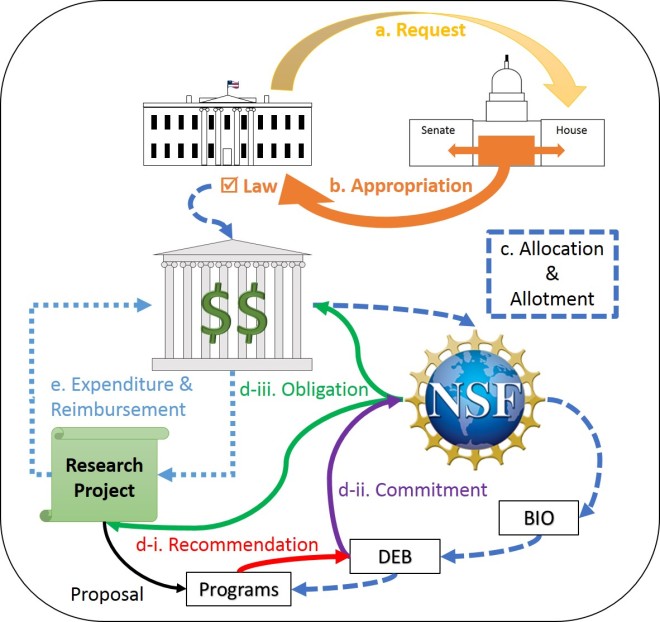

Why the project title matters to NSF

The project title is the most meaningful and unique piece of your proposal that carries over to the public award description. Everything else in your proposal is distilled down to a couple of paragraphs of “public abstract” and a few dozen metadata records available via the NSF award search and research.gov[ii]. Consider, too, that the project title is a part of your proposal for which NSF takes responsibility and exercises editorial power. We can, and sometimes do, change project titles (about a quarter are changed, mostly for clarity, such as writing out abbreviations).

Why the project title matters to you

The project title and PI info are the only things most potential reviewers will ever see before deciding whether to review your proposal. The title is your first (and typically only) shot to communicate to a reviewer that your proposal is interesting and worth their time to review[iii]. And as we said above, if your proposal gets funded, the title gets posted on the NSF public awards website along with the PI name and institution.

You can (and should) provide effective project titles

When you receive an award, the title will be searchable by anyone and permanently associated with your name. Over the years, we’ve seen a vast array of proposal titles. We’ve also seen how they affect the audiences (reviewers, panels, and the public) who read or hear them. Based on our accumulated observations and experiences in DEB, we’ve put together these eight tips to consider when composing your project titles.

Keep in mind: The following are not any sort of universally enforced rules or NSF policy. The proposal title is initially your responsibility, but as we said, once it comes into NSF, we can edit it as needed. Ultimately, what makes a good title is subjective and is probably not constant across disciplines or over time. These are just some broad and general tips we hope you’ll find helpful.

Tip 1: Know your broader audiences

Reviewers, including panelists, are specialists, but not necessarily from the same sub-sub-specialty as you. Public readers of award titles cover an even wider range of knowledge and expertise. These are the people who are going to read that title and make a decision whether to take action. Reviewers will, first, decide whether or not to read, and then, whether or not to support your proposal. The public will decide whether to read your award abstract, and the media will decide whether to contact you.

There are both good and bad potential outcomes of public attention. It may seem that a strong, scientifically precise, and erudite proposal title will inform and impress readers. But that misses half the point: it’s not simply about avoiding misunderstanding. Instead, a good title is a vehicle for audience engagement; it seeks to cultivate positive responses. This happens when you use straightforward, plain language, minimizing jargon and tech-speak, with a clear message. The rest of these tips are basically more specific examples of ways to do this.

Tip 2: Write to your (proposal’s) strengths

Most of us feel some twinge of annoyance when we see a misleading headline or publication title, e.g. “Transformative Biology Research to Cure All Diseases.” This is your chance to get it right! Don’t bury the lede. Focus your title on the core idea of the proposal. In many cases, details like the organism, the location, or the specific method are secondary[iv]; if you include them, do so carefully, in supporting roles and not swamping the central conceptual component[v]. If you wrote your title before your proposal, it’s a good idea to come back around to it before hitting submit.

Tip 3: Using Buzzwords #OnFleek

It’s a bit cliché to say this, but it bears mention: don’t tell us your project is great, demonstrate it. That is what the project description is for. We like “transformative” and “interdisciplinary” projects, but placing those words in your title doesn’t imbue your project with those qualities. Similarly, loading up on topical or methodological buzzwords (“*omics”, “CRISPR”, etc.) adds little when the major consideration is the knowledge you’re seeking to uncover, not the shiny new tool you want to wield or the loose connection to a hot topic. The space you save by dropping this extra verbiage can allow you to address other important aspects of your project.

Tip 4: Acronyms

They save space in your title. And NSF seems to have them all over the place (it’s an ARE: Acronym Rich Environment). So, why not use them, right? Well… tread carefully.

We use the various title prefixes we ask for (e.g., RUI, CAREER) to 1) ensure reviewers see that special review criteria apply and 2) check that we’ve applied the right processing to your proposal. They’re often acronyms because we don’t want to waste your character count. So, we want those on your proposals[vi], but after merit review we may remove them before making an award. Other acronyms that you add tend to fall into two categories:

- Compressed jargon: for example, “NGS” for Next Generation Sequencing. Without the whole proposal immediately behind it, an acronym in your title may never actually be defined in the public description, and it may imply something unintended to some in the audience.

- Project-name shorthand: There are perhaps a handful of projects that, through longevity and productivity, have attained enough visibility and distinctiveness to be known by an acronym or other shorthand within their research community. Even if your project has achieved this distinction, remember that your audience goes beyond your community: not everyone will know of it. Further, trying to create a catchy nickname for a project (or program) usually doesn’t add anything to your proposal and can lead to some real groan-inducing stretches of language.

Tip 5: Questions to consider

How will reviewers respond to a title phrased as a question? Is the answer already an obvious yes or no? If so, why do you need the proposal and more money? Is this question even answerable with your proposed work? Is this one of the very rare projects that can be effectively encapsulated in this way?

Tip 6: Attempted humor

This can work; it may also fall flat (see above entry on “Questions”). To some audiences, it can make your project seem unprofessional and illegitimate. That is a sizeable risk. It used to be, and still is to some extent, a fairly common practice to put a joke or cartoon in your slide deck to “lighten the mood” and “connect with your audience”. If you’ve ever seen a poor presenter do this, you know it’s not a universally good thing. A proposal title is always there and doesn’t get buried under the rest of the material, as might happen with a slide. The alternative is to skip the joke and write something that connects with your reader through personality and creativity instead. This can be hard to do, but practice helps. For example, “I Ain’t Afraid of No Host: The Saga of a Generalist Parasite” is a title we made up that we find funny, but will everyone reading it think it is funny, and does the humor help the grant? It isn’t very informative. Again, tread lightly.

Tip 7: Latin vs Common terms

Per Tip 2, you may not always list an organism in your project title; but when you do, make it accessible. The Latin name alone places a burden of prior knowledge or extra work on readers. Adding a common name label too is a courtesy to public readers, not to mention your own SRO, who may be filling out paperwork about your proposal, and panelists, who may be far afield from your system and unfamiliar with your organism. But be careful. Some common names are too specific, jargon-y, or even misleading for a general audience. You don’t want, for instance, someone to see “mouse-ear cress” for Arabidopsis thaliana and think you’re working on vertebrate animal auditory systems (this has happened![vii]).

Tip 8: Thoughtful Word Choice

This tip extends the concern about confusing language, which we already raised regarding Latin names and acronyms, to jargon in general. Some jargon is problematic just because it is dense; as with Latin names and acronyms, this sort of jargon can be addressed by adding or substituting common terms. Other jargon is problematic because the audience understands it, but differently than intended. Meg Duffy over at Dynamic Ecology had a post on this some time back in the context of teaching and communication. These issues arise in proposals too. There are some very core words in our fields that don’t necessarily evoke the same meaning to a general audience or even across fields. The most straightforward example we can point to is our own name: the “E” in DEB stands for “environmental.” To a general audience, “environmental” is more evocative of “environmentalism,” “conservation,” recycling programs, and specific policy goals than it is of any form of basic research[viii]. Addressing this sort of jargon in a proposal title is a bit harder because the word already seems common, and concise alternative phrasings are hard to come by.

For jargon, it might benefit you to try bouncing your title off of a neighbor, an undergrad outside your department, or an administrator colleague. In some cases, you might find a better, clearer approach. In others, maybe there’s not a better wording, but at least you are more aware of the potential misunderstandings.

Final Thoughts

Most of the project titles that we see won’t lead to awards and will never be published; and even when an award is made, its title usually attracts little notice. A few, however, will be seen by thousands or be picked up by the media and broadcast to millions. Thus, the title seems like a small and inconsequential thing, until it’s suddenly important. Because of this, even though the project title is a small piece of your proposal, it is worthy of attention and investment. We have provided the tips above to help you craft a title that uses straightforward, plain language to convey a clear and engaging message to your audiences.

We can’t avoid attention. In fact, we want to draw positive attention to the awesome work you do. But audience reactions are reliably unpredictable. The best we can do is to make sure that what we’re putting out there is as clear and understandable as possible.

[i] Anything related to research funding from policies on our end to research papers to tweets or videos mentioning projects.

[ii] At the close of an award, you are also required to file a “Project Outcomes Report” via Research.gov. This also becomes part of the permanent project record and publicly visible when your work is complete. We don’t edit these.

[iii] For the “good titles” argument as applied to research papers, see here: https://smallpondscience.com/2016/10/19/towards-better-titles-for-academic-papers-an-evaluative-approach-from-a-blogging-perspective/

[iv] There are obvious exceptions here, like a proposal for a targeted biodiversity survey in a geographical region.

[v] For what it’s worth, this is a common “rookie mistake” even before writing a proposal. We get lots of inquiries along the lines of “do you fund studies on organism X” or “in place Y”. The short answer is yes, but it’s often irrelevant because that doesn’t differentiate DEB from MCB or IOS or BioOCE. We don’t define the Division of Environmental Biology by organisms, or places, or tools, or methods. We define it by the nature of the fundamental questions being addressed by the research.

[vi] Some prefixes are mutually exclusive of one another. For example, CAREER and RUI cannot both be applied to the same proposal (http://www.nsf.gov/pubs/2015/nsf15057/nsf15057.jsp#a16).

[vii] Better alternatives might have been “plant”, “wild mustard”,

[viii] And yes, we do get the same sorts of calls and emails about “sick trees”, “that strange bird I saw”, “what to do about spiders,” etc. as you do.